RAID

Basic concepts

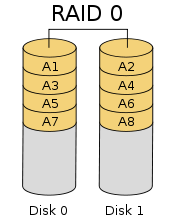

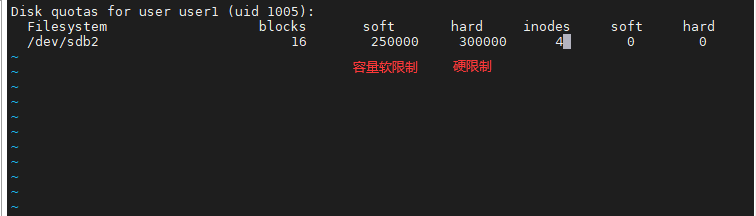

RAID0

Striping with no redundancy; requires at least two disks

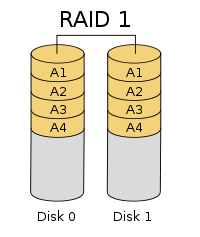

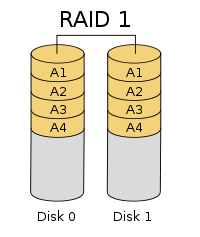
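As a rough illustration of striping, the sketch below maps a logical chunk number to a disk and a stripe row. It assumes equal-sized disks and a simple round-robin layout. The function name `raid0_locate` is made up for this note and is not part of any RAID tool.

```shell
#!/bin/sh
# Simplified RAID0 model: chunks are distributed round-robin across disks.
# raid0_locate CHUNK NDISKS -> prints "disk=<index> stripe=<row>"
raid0_locate() {
    chunk=$1
    ndisks=$2
    echo "disk=$(( chunk % ndisks )) stripe=$(( chunk / ndisks ))"
}

# Chunks 0..5 on a two-disk array alternate between disk 0 and disk 1.
for c in 0 1 2 3 4 5; do
    echo "chunk $c -> $(raid0_locate "$c" 2)"
done
```

Because consecutive chunks land on different disks, a large sequential read or write can keep every disk busy at once, but losing any one disk loses part of every large file.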

RAID1

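Two disks hold a complete mirror of each other, which provides fault tolerance at a high cost: only half of the raw capacity is usable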

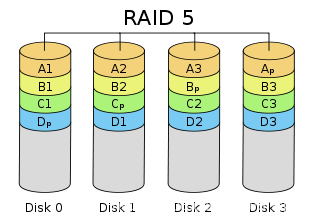

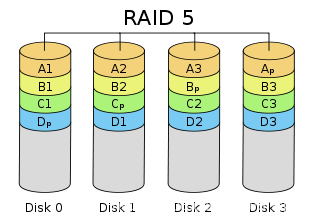

RAID5

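Parity data is distributed across all disks in the array, and the array can be expanded. When one disk fails, the lost data can be rebuilt from the other data blocks in the same stripe together with the corresponding parity. It is one of the more common data protection schemes.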

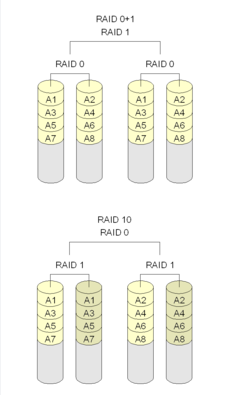

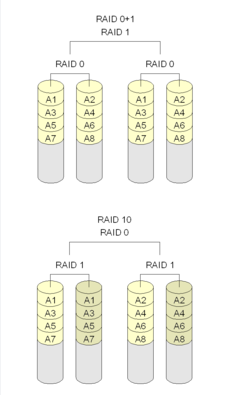
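The rebuild described above relies on XOR parity. The sketch below is a toy model, assuming a stripe with just two data blocks represented as small integers. The values are arbitrary and chosen only for illustration.

```shell
#!/bin/sh
# Two data blocks in one stripe, represented as small integers.
d1=172   # 0xAC
d2=53    # 0x35

# The parity block is the XOR of the data blocks in the stripe.
parity=$(( d1 ^ d2 ))

# Suppose the disk holding d1 fails: XOR the survivors to rebuild it.
rebuilt=$(( parity ^ d2 ))

echo "parity=$parity rebuilt_d1=$rebuilt"
```

The same identity holds for any number of data blocks, which is why a RAID5 array can survive the loss of exactly one disk per stripe.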

RAID10&RAID01

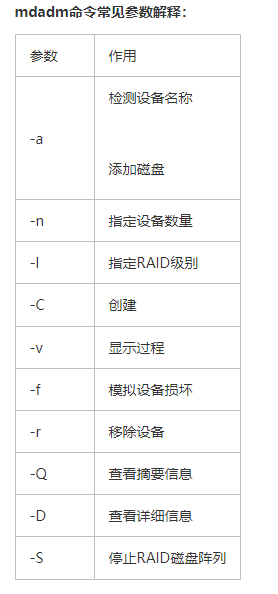

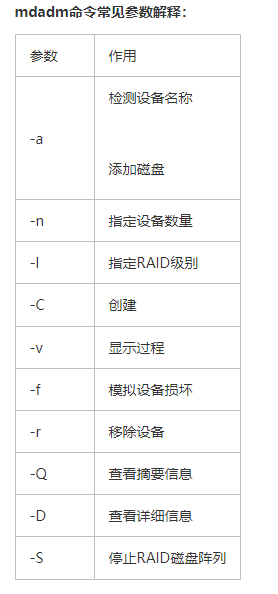
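To compare the levels above, here is a small usable-capacity calculator. It is a sketch that assumes all disks are the same size, ignores metadata overhead, and treats RAID10 as two-way mirrors. The function name `usable_capacity` is hypothetical.

```shell
#!/bin/sh
# usable_capacity LEVEL NDISKS DISK_SIZE -> usable size in the same unit
# Assumes all disks have the same size and ignores metadata overhead.
usable_capacity() {
    level=$1; n=$2; size=$3
    case "$level" in
        0)  echo $(( n * size )) ;;          # striping only
        1)  echo "$size" ;;                  # every disk holds a full copy
        5)  echo $(( (n - 1) * size )) ;;    # one disk's worth of parity
        10) echo $(( n / 2 * size )) ;;      # two-way mirrors, then striped
        *)  echo "unsupported level: $level" >&2; return 1 ;;
    esac
}

usable_capacity 10 4 20   # four 20 GB disks in RAID10
usable_capacity 5 3 20    # three 20 GB disks in RAID5
```

Both calls print 40: RAID10 spends half of four disks on mirroring, and RAID5 spends one of three disks on parity.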

Lab

RAID10

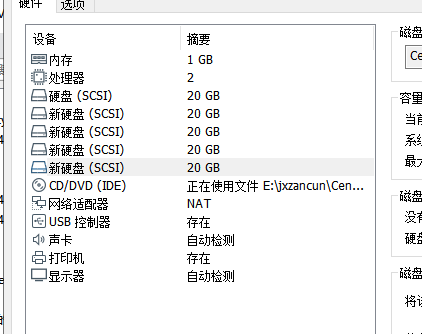

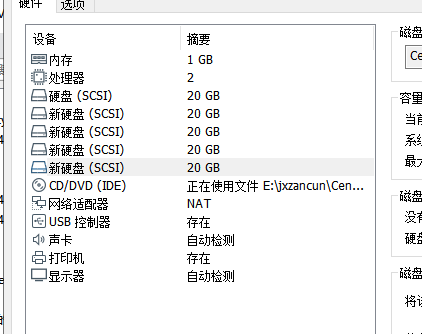

Add four disks

Install mdadm

[root@server1 ~]# mdadm -Cv /dev/md0 -a yes -n 4 -l 10 /dev/sdb /dev/sdc /dev/sdd /dev/sde

mdadm: layout defaults to n2

mdadm: layout defaults to n2

mdadm: chunk size defaults to 512K

mdadm: partition table exists on /dev/sdb

mdadm: partition table exists on /dev/sdb but will be lost or

meaningless after creating array

mdadm: partition table exists on /dev/sdc

mdadm: partition table exists on /dev/sdc but will be lost or

meaningless after creating array

mdadm: partition table exists on /dev/sdd

mdadm: partition table exists on /dev/sdd but will be lost or

meaningless after creating array

mdadm: partition table exists on /dev/sde

mdadm: partition table exists on /dev/sde but will be lost or

meaningless after creating array

mdadm: size set to 20954112K

Continue creating array? y

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md0 started.

# Format

mkfs.ext4 /dev/md0

# Mount

[root@server1 ~]# mkdir /raid10

[root@server1 ~]# mount /dev/md0 /raid10/

[root@server1 ~]# df -hT

Filesystem Type Size Used Avail Use% Mounted on

/dev/mapper/centos-root xfs 17G 1.2G 16G 7% /

devtmpfs devtmpfs 475M 0 475M 0% /dev

tmpfs tmpfs 487M 0 487M 0% /dev/shm

tmpfs tmpfs 487M 7.7M 479M 2% /run

tmpfs tmpfs 487M 0 487M 0% /sys/fs/cgroup

/dev/sda1 xfs 1014M 133M 882M 14% /boot

tmpfs tmpfs 98M 0 98M 0% /run/user/0

/dev/md0 ext4 40G 49M 38G 1% /raid10

# View the array details, then write the mount to /etc/fstab to make it permanent

[root@server1 ~]# mdadm -D /dev/md0

/dev/md0:

Version : 1.2

Creation Time : Fri Jan 14 15:10:31 2022

Raid Level : raid10

Array Size : 41908224 (39.97 GiB 42.91 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 4

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Fri Jan 14 15:13:28 2022

State : clean

Active Devices : 4

Working Devices : 4

Failed Devices : 0

Spare Devices : 0

Layout : near=2

Chunk Size : 512K

Consistency Policy : resync

Name : server1:0 (local to host server1)

UUID : 3d044cfd:cfce3ec6:bd588e7a:3ef0a55e

Events : 27

Number Major Minor RaidDevice State

0 8 16 0 active sync set-A /dev/sdb

1 8 32 1 active sync set-B /dev/sdc

2 8 48 2 active sync set-A /dev/sdd

3 8 64 3 active sync set-B /dev/sde

echo "/dev/md0 /raid10 ext4 defaults 0 0" >> /etc/fstab
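An md device name such as /dev/md0 is not guaranteed to stay the same across reboots unless the array is recorded in the mdadm configuration file. A more defensive variant is to record the array and mount by filesystem UUID. The sketch below only builds the fstab line. The helper `fstab_entry` and the all-zero UUID are placeholders, and the commented commands assume a CentOS-style /etc/mdadm.conf.

```shell
#!/bin/sh
# fstab_entry UUID MOUNTPOINT FSTYPE -> one /etc/fstab line keyed by UUID
fstab_entry() {
    printf 'UUID=%s %s %s defaults 0 0\n' "$1" "$2" "$3"
}

# On the real system (as root) the pieces would come from:
#   mdadm -D --scan >> /etc/mdadm.conf        # record the array definition
#   uuid=$(blkid -s UUID -o value /dev/md0)   # filesystem UUID
#   fstab_entry "$uuid" /raid10 ext4 >> /etc/fstab
# The UUID below is a placeholder for illustration only.
fstab_entry 00000000-0000-0000-0000-000000000000 /raid10 ext4
```

Running `mount -a` after editing /etc/fstab is a quick way to catch a typo in the mount point before the next reboot does.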

Failure and repair

# Simulate a failure

[root@server1 ~]# mdadm /dev/md0 -f /dev/sdb

mdadm: set /dev/sdb faulty in /dev/md0

[root@server1 ~]# mdadm -D /dev/md0

/dev/md0:

Version : 1.2

Creation Time : Fri Jan 14 15:10:31 2022

Raid Level : raid10

Array Size : 41908224 (39.97 GiB 42.91 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 4

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Fri Jan 14 15:17:27 2022

State : clean, degraded

Active Devices : 3

Working Devices : 3

Failed Devices : 1

Spare Devices : 0

Layout : near=2

Chunk Size : 512K

Consistency Policy : resync

Name : server1:0 (local to host server1)

UUID : 3d044cfd:cfce3ec6:bd588e7a:3ef0a55e

Events : 29

Number Major Minor RaidDevice State

- 0 0 0 removed

1 8 32 1 active sync set-B /dev/sdc

2 8 48 2 active sync set-A /dev/sdd

3 8 64 3 active sync set-B /dev/sde

0 8 16 - faulty /dev/sdb

# Pretend the disk has really failed: buy a new disk, shut down, swap it in, reboot, then add the replacement disk back to the array

[root@server1 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 19G 0 part

├─centos-root 253:0 0 17G 0 lvm /

└─centos-swap 253:1 0 2G 0 lvm [SWAP]

sdb 8:16 0 20G 0 disk

sdc 8:32 0 20G 0 disk

└─md0 9:0 0 40G 0 raid10 /rain10

sdd 8:48 0 20G 0 disk

└─md0 9:0 0 40G 0 raid10 /rain10

sde 8:64 0 20G 0 disk

└─md0 9:0 0 40G 0 raid10 /rain10

sr0 11:0 1 918M 0 rom

[root@server1 ~]# umount /dev/md0

[root@server1 ~]# mdadm /dev/md0 -a /dev/sdb

mdadm: added /dev/sdb

[root@server1 ~]# mdadm -D /dev/md0

/dev/md0:

Version : 1.2

Creation Time : Fri Jan 14 15:10:31 2022

Raid Level : raid10

Array Size : 41908224 (39.97 GiB 42.91 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 4

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Fri Jan 14 15:23:16 2022

State : clean, degraded, recovering

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Layout : near=2

Chunk Size : 512K

Consistency Policy : resync

Rebuild Status : 16% complete

Name : server1:0 (local to host server1)

UUID : 3d044cfd:cfce3ec6:bd588e7a:3ef0a55e

Events : 39

Number Major Minor RaidDevice State

4 8 16 0 spare rebuilding /dev/sdb

1 8 32 1 active sync set-B /dev/sdc

2 8 48 2 active sync set-A /dev/sdd

3 8 64 3 active sync set-B /dev/sde
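While the array rebuilds, the progress can be pulled out of the `mdadm -D` output (or read from /proc/mdstat). The helper below is a sketch. `rebuild_percent` is a made-up name, and the sample text is copied from the output above so that the snippet runs without an array.

```shell
#!/bin/sh
# rebuild_percent: read `mdadm -D` output on stdin and print the rebuild
# percentage, or nothing if the array is not rebuilding.
rebuild_percent() {
    awk -F: '/Rebuild Status/ { gsub(/[^0-9]/, "", $2); print $2 }'
}

# Sample text copied from the output above; on a live system use:
#   mdadm -D /dev/md0 | rebuild_percent
sample='State : clean, degraded, recovering
Rebuild Status : 16% complete'

printf '%s\n' "$sample" | rebuild_percent
```

The array stays usable while it rebuilds, but it has no redundancy for the affected mirror pair until the rebuild finishes.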

RAID5 + hot spare

A RAID5 array needs at least three disks; with one additional hot spare, that makes four in total

[root@server1 ~]# mdadm -Cv /dev/md0 -n 3 -l 5 -x 1 /dev/sdb /dev/sdc /dev/sdd /dev/sde

mdadm: layout defaults to left-symmetric

mdadm: layout defaults to left-symmetric

mdadm: chunk size defaults to 512K

mdadm: size set to 20954112K

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md0 started.

[root@server1 ~]# mdadm -D /dev/md0

/dev/md0:

Version : 1.2

Creation Time : Sun Jan 16 08:57:35 2022

Raid Level : raid5

Array Size : 41908224 (39.97 GiB 42.91 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sun Jan 16 08:58:34 2022

State : clean, degraded, recovering

Active Devices : 2

Working Devices : 4

Failed Devices : 0

Spare Devices : 2

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Rebuild Status : 61% complete

Name : server1:0 (local to host server1)

UUID : 181d0cf3:6dbae3e6:d6ab8f42:2653f86c

Events : 10

Number Major Minor RaidDevice State

0 8 16 0 active sync /dev/sdb

1 8 32 1 active sync /dev/sdc

4 8 48 2 spare rebuilding /dev/sdd

3 8 64 - spare /dev/sde

[root@server1 ~]# mkfs.ext4 /dev/md0

mke2fs 1.42.9 (28-Dec-2013)

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=128 blocks, Stripe width=256 blocks

2621440 inodes, 10477056 blocks

523852 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=2157969408

320 block groups

32768 blocks per group, 32768 fragments per group

8192 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,

4096000, 7962624

Allocating group tables: done

Writing inode tables: done

Creating journal (32768 blocks): done

Writing superblocks and filesystem accounting information: done

# Note: this is a temporary mount; for long-term use, add it to /etc/fstab

[root@server1 ~]# mkdir /mnt/raid5

[root@server1 ~]# mount /dev/md0 /mnt/raid5/

Simulate the failure of one disk; the hot spare (/dev/sde) takes over automatically and starts rebuilding

[root@server1 ~]# mdadm /dev/md0 -f /dev/sdb

mdadm: set /dev/sdb faulty in /dev/md0

[root@server1 ~]# mdadm -D /dev/md0

/dev/md0:

Version : 1.2

Creation Time : Sun Jan 16 08:57:35 2022

Raid Level : raid5

Array Size : 41908224 (39.97 GiB 42.91 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sun Jan 16 09:02:57 2022

State : clean, degraded, recovering

Active Devices : 2

Working Devices : 3

Failed Devices : 1

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Rebuild Status : 24% complete

Name : server1:0 (local to host server1)

UUID : 181d0cf3:6dbae3e6:d6ab8f42:2653f86c

Events : 25

Number Major Minor RaidDevice State

3 8 64 0 spare rebuilding /dev/sde

1 8 32 1 active sync /dev/sdc

4 8 48 2 active sync /dev/sdd

0 8 16 - faulty /dev/sdb
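Once the spare has taken over, the faulty member is still listed in the array. A possible follow-up is to remove it and add a fresh disk as the new spare. The sketch below only prints the commands unless `DRY_RUN=0` is set. `replace_failed` is a hypothetical helper, and /dev/sdf is an assumed name for the replacement disk.

```shell
#!/bin/sh
# Print the commands by default; set DRY_RUN=0 to execute them as root.
DRY_RUN=${DRY_RUN:-1}
run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# replace_failed ARRAY FAILED NEW: detach a faulty member, add a new spare.
replace_failed() {
    run mdadm "$1" -r "$2"   # remove the member already marked faulty
    run mdadm "$1" -a "$3"   # add the replacement; it becomes the new spare
}

# /dev/sdf is a hypothetical replacement disk, not one from the lab above.
replace_failed /dev/md0 /dev/sdb /dev/sdf
```

Keeping the dry run as the default makes it harder to remove the wrong device by accident.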

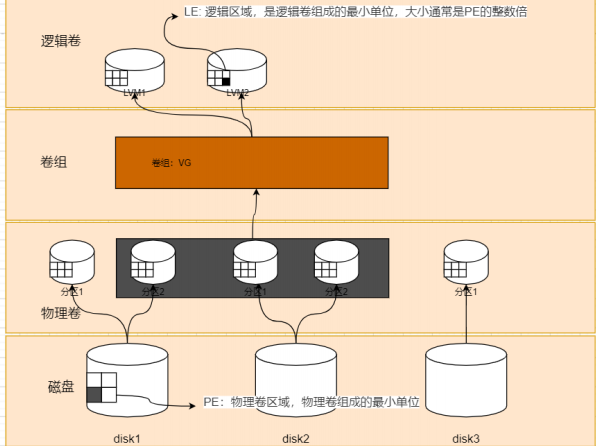

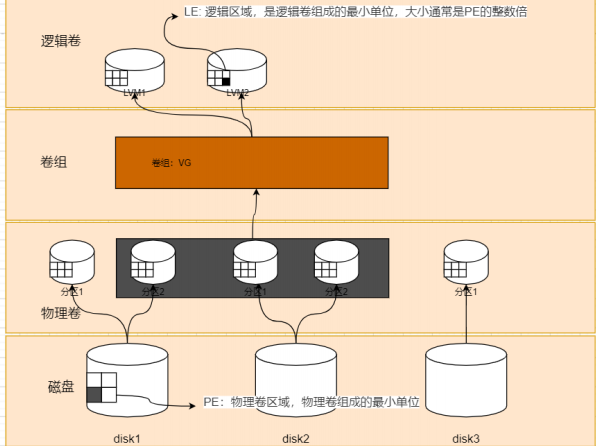

LVM

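Physical volume (PV): a physical disk, a partition, or a disk array

Volume group (VG): built from physical volumes; one volume group can contain multiple physical volumes, and more can be added after it is created

Logical volume (LV): a volume carved out of the free space in a volume group; it acts as a virtual disk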

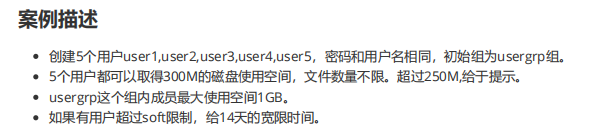

Example

Create a logical volume, extend it, shrink it, take a snapshot and restore from it, then delete the logical volume

Create

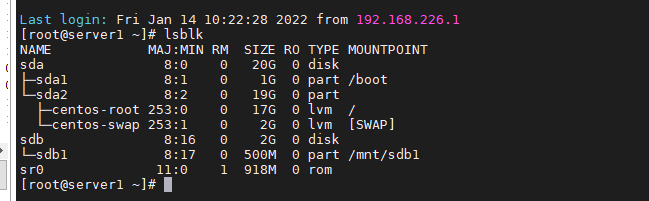

Add two disks and initialize them as physical volumes so that LVM can use them

[root@server1 ~]# pvcreate /dev/sdb

Physical volume "/dev/sdb" successfully created.

[root@server1 ~]# pvcreate /dev/sdc

Physical volume "/dev/sdc" successfully created.

Combine the two disks into one volume group. Partitions work as well, but they must not be formatted with a filesystem

[root@server1 ~]# vgcreate vg1 /dev/sdb /dev/sdc

Volume group "vg1" successfully created

[root@server1 ~]# vgdisplay

--- Volume group ---

VG Name centos

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 3

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 2

Open LV 2

Max PV 0

Cur PV 1

Act PV 1

VG Size <19.00 GiB

PE Size 4.00 MiB

Total PE 4863

Alloc PE / Size 4863 / <19.00 GiB

Free PE / Size 0 / 0

VG UUID zyNdps-Jtez-2bvl-LvtC-8EKc-GN8U-a5hEPd

--- Volume group ---

VG Name vg1

System ID

Format lvm2

Metadata Areas 2

Metadata Sequence No 1

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 0

Open LV 0

Max PV 0

Cur PV 2

Act PV 2

VG Size 39.99 GiB

PE Size 4.00 MiB

Total PE 10238

Alloc PE / Size 0 / 0

Free PE / Size 10238 / 39.99 GiB

VG UUID EYo89p-6UU4-vnMZ-mPYa-uh6h-tatV-NCK50L

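Carve out a logical volume

There are two ways to specify the size:

- -L: by capacity, e.g. -L 100M

- -l: by number of physical extents (PEs); one extent is 4 MiB by default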
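The two options are related by the extent size: a capacity given with -L is rounded up to a whole number of extents. The sketch below shows the arithmetic, assuming the default 4 MiB extent. `extents_for` is a made-up helper name.

```shell
#!/bin/sh
# extents_for SIZE_MIB [PE_MIB]: number of extents needed, rounding up.
extents_for() {
    size=$1
    pe=${2:-4}   # default physical extent size of 4 MiB
    echo $(( (size + pe - 1) / pe ))
}

size=150
le=$(extents_for "$size")
echo "-L ${size}M needs $le extents = $(( le * 4 )) MiB"
```

For 150 MiB this gives 38 extents, or 152 MiB, so `-L 150M` and `-l 38` ask for the same volume.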

[root@server1 ~]# lvcreate -n v1 -L 150M vg1

Rounding up size to full physical extent 152.00 MiB

Logical volume "v1" created.

[root@server1 ~]# lvdisplay

--- Logical volume ---

LV Path /dev/centos/swap

LV Name swap

VG Name centos

LV UUID EcfqJv-Y3c0-ZWpI-D51M-CrsE-zyll-p0QA9R

LV Write Access read/write

LV Creation host, time localhost, 2022-01-12 10:04:09 +0800

LV Status available

# open 2

LV Size 2.00 GiB

Current LE 512

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:1

--- Logical volume ---

LV Path /dev/centos/root

LV Name root

VG Name centos

LV UUID LF6tdh-zsUY-iWK3-I1FB-DKkS-Xu4S-hdc4st

LV Write Access read/write

LV Creation host, time localhost, 2022-01-12 10:04:10 +0800

LV Status available

# open 1

LV Size <17.00 GiB

Current LE 4351

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:0

--- Logical volume ---

LV Path /dev/vg1/v1

LV Name v1

VG Name vg1

LV UUID 11j9V3-Ho6W-cmrr-23EM-PWJh-Qww5-adyf2u

LV Write Access read/write

LV Creation host, time server1, 2022-01-14 18:38:46 +0800

LV Status available

# open 0

LV Size 152.00 MiB

Current LE 38

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:2

Format the logical volume and mount it

[root@server1 ~]# mkfs.ext4 /dev/vg1/v1

mke2fs 1.42.9 (28-Dec-2013)

Filesystem label=

OS type: Linux

Block size=1024 (log=0)

Fragment size=1024 (log=0)

Stride=0 blocks, Stripe width=0 blocks

38912 inodes, 155648 blocks

7782 blocks (5.00%) reserved for the super user

First data block=1

Maximum filesystem blocks=33816576

19 block groups

8192 blocks per group, 8192 fragments per group

2048 inodes per group

Superblock backups stored on blocks:

8193, 24577, 40961, 57345, 73729

Allocating group tables: done

Writing inode tables: done

Creating journal (4096 blocks): done

Writing superblocks and filesystem accounting information: done

[root@server1 mnt]# mount /dev/vg1/v1 v_1

[root@server1 mnt]# df -hT

Filesystem Type Size Used Avail Use% Mounted on

/dev/mapper/centos-root xfs 17G 1.2G 16G 7% /

devtmpfs devtmpfs 475M 0 475M 0% /dev

tmpfs tmpfs 487M 0 487M 0% /dev/shm

tmpfs tmpfs 487M 7.7M 479M 2% /run

tmpfs tmpfs 487M 0 487M 0% /sys/fs/cgroup

/dev/sda1 xfs 1014M 133M 882M 14% /boot

tmpfs tmpfs 98M 0 98M 0% /run/user/0

/dev/mapper/vg1-v1 ext4 144M 1.6M 132M 2% /mnt/v_1

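Extend

e2fsck checks an ext2/ext3/ext4 filesystem for consistency.

-f forces a check even if the filesystem is marked clean

resize2fs: resizes an ext2/ext3/ext4 filesystem; here it is always run on an unmounted filesystem, which is required when shrinking

# Unmount, then extend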

umount /dev/vg1/v1

[root@server1 mnt]# lvextend -L 300M /dev/vg1/v1

Size of logical volume vg1/v1 changed from 152.00 MiB (38 extents) to 300.00 MiB (75 extents).

Logical volume vg1/v1 successfully resized.

# Check integrity, then resize the filesystem

[root@server1 mnt]# e2fsck -f /dev/vg1/v1

e2fsck 1.42.9 (28-Dec-2013)

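Pass 1: Checking inodes, blocks, and sizes

Pass 2: Checking directory structure

Pass 3: Checking directory connectivity

Pass 4: Checking reference counts

Pass 5: Checking group summary information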

/dev/vg1/v1: 11/38912 files (0.0% non-contiguous), 10567/155648 blocks

[root@server1 mnt]# resize2fs /dev/vg1/v1

resize2fs 1.42.9 (28-Dec-2013)

Resizing the filesystem on /dev/vg1/v1 to 307200 (1k) blocks.

The filesystem on /dev/vg1/v1 is now 307200 blocks long.

# Remount

[root@server1 mnt]# mount /dev/vg1/v1 v_1

[root@server1 mnt]# df -hT

Filesystem Type Size Used Avail Use% Mounted on

/dev/mapper/centos-root xfs 17G 1.2G 16G 7% /

devtmpfs devtmpfs 475M 0 475M 0% /dev

tmpfs tmpfs 487M 0 487M 0% /dev/shm

tmpfs tmpfs 487M 7.7M 479M 2% /run

tmpfs tmpfs 487M 0 487M 0% /sys/fs/cgroup

/dev/sda1 xfs 1014M 133M 882M 14% /boot

tmpfs tmpfs 98M 0 98M 0% /run/user/0

/dev/mapper/vg1-v1 ext4 287M 2.1M 266M 1% /mnt/v_1

Shrink

[root@server1 mnt]# umount /dev/vg1/v1

[root@server1 mnt]# e2fsck -f /dev/vg1/v1

e2fsck 1.42.9 (28-Dec-2013)

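Pass 1: Checking inodes, blocks, and sizes

Pass 2: Checking directory structure

Pass 3: Checking directory connectivity

Pass 4: Checking reference counts

Pass 5: Checking group summary information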

/dev/vg1/v1: 11/77824 files (0.0% non-contiguous), 15987/307200 blocks

[root@server1 mnt]# resize2fs /dev/vg1/v1 150M

resize2fs 1.42.9 (28-Dec-2013)

Resizing the filesystem on /dev/vg1/v1 to 153600 (1k) blocks.

The filesystem on /dev/vg1/v1 is now 153600 blocks long.

[root@server1 mnt]# lvreduce -L 150M /dev/vg1/v1

Rounding size to boundary between physical extents: 152.00 MiB.

WARNING: Reducing active logical volume to 152.00 MiB.

THIS MAY DESTROY YOUR DATA (filesystem etc.)

Do you really want to reduce vg1/v1? [y/n]: y

Size of logical volume vg1/v1 changed from 300.00 MiB (75 extents) to 152.00 MiB (38 extents).

Logical volume vg1/v1 successfully resized.

[root@server1 mnt]# mount /dev/vg1/v1 v_1

[root@server1 mnt]# df -hT

Filesystem Type Size Used Avail Use% Mounted on

/dev/mapper/centos-root xfs 17G 1.2G 16G 7% /

devtmpfs devtmpfs 475M 0 475M 0% /dev

tmpfs tmpfs 487M 0 487M 0% /dev/shm

tmpfs tmpfs 487M 7.7M 479M 2% /run

tmpfs tmpfs 487M 0 487M 0% /sys/fs/cgroup

/dev/sda1 xfs 1014M 133M 882M 14% /boot

tmpfs tmpfs 98M 0 98M 0% /run/user/0

/dev/mapper/vg1-v1 ext4 142M 1.6M 130M 2% /mnt/v_1
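The order of the steps is what separates a safe resize from a destructive one: when extending, the volume grows first and the filesystem follows; when shrinking, the filesystem shrinks first and the volume follows. The sketch below only prints the two sequences for an unmounted ext4 volume. `resize_plan` is a hypothetical helper and runs nothing itself.

```shell
#!/bin/sh
# resize_plan grow|shrink LV SIZE: print the commands in a safe order.
resize_plan() {
    case "$1" in
        grow)
            echo "lvextend -L $3 $2"   # enlarge the volume first
            echo "e2fsck -f $2"
            echo "resize2fs $2"        # then grow the filesystem into it
            ;;
        shrink)
            echo "e2fsck -f $2"
            echo "resize2fs $2 $3"     # shrink the filesystem first
            echo "lvreduce -L $3 $2"   # then cut the volume down to match
            ;;
        *)
            echo "usage: resize_plan grow|shrink LV SIZE" >&2
            return 1
            ;;
    esac
}

resize_plan grow /dev/vg1/v1 300M
resize_plan shrink /dev/vg1/v1 150M
```

Running lvreduce before resize2fs would cut off the end of a filesystem that still expects the old size, which is what the lvreduce data-loss warning is about.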

Snapshot

Write a file for comparison

[root@server1 mnt]# echo "hello world" >> v_1/1.txt

[root@server1 mnt]# ls -l v_1/

total 14

-rw-r--r--. 1 root root 12 Jan 14 19:33 1.txt

drwx------. 2 root root 12288 Jan 14 18:40 lost+found

-s creates a snapshot

[root@server1 mnt]# lvcreate -L 150M -s -n KZ1 /dev/vg1/v1

Rounding up size to full physical extent 152.00 MiB

Logical volume "KZ1" created.

[root@server1 mnt]# lvdisplay

--- Logical volume ---

LV Path /dev/centos/swap

LV Name swap

VG Name centos

LV UUID EcfqJv-Y3c0-ZWpI-D51M-CrsE-zyll-p0QA9R

LV Write Access read/write

LV Creation host, time localhost, 2022-01-12 10:04:09 +0800

LV Status available

# open 2

LV Size 2.00 GiB

Current LE 512

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:1

--- Logical volume ---

LV Path /dev/centos/root

LV Name root

VG Name centos

LV UUID LF6tdh-zsUY-iWK3-I1FB-DKkS-Xu4S-hdc4st

LV Write Access read/write

LV Creation host, time localhost, 2022-01-12 10:04:10 +0800

LV Status available

# open 1

LV Size <17.00 GiB

Current LE 4351

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:0

--- Logical volume ---

LV Path /dev/vg1/v1

LV Name v1

VG Name vg1

LV UUID 11j9V3-Ho6W-cmrr-23EM-PWJh-Qww5-adyf2u

LV Write Access read/write

LV Creation host, time server1, 2022-01-14 18:38:46 +0800

LV snapshot status source of

KZ1 [active]

LV Status available

# open 1

LV Size 152.00 MiB

Current LE 38

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:2

--- Logical volume ---

LV Path /dev/vg1/KZ1

LV Name KZ1

VG Name vg1

LV UUID XYTTPp-WpPS-F9Hq-qqzD-xUPJ-adpa-kkug2S

LV Write Access read/write

LV Creation host, time server1, 2022-01-14 19:45:49 +0800

LV snapshot status active destination for v1

LV Status available

# open 0

LV Size 152.00 MiB

Current LE 38

COW-table size 152.00 MiB

COW-table LE 38

Allocated to snapshot 0.01%

Snapshot chunk size 4.00 KiB

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:5

After writing a large file to the origin, the space used in the snapshot volume goes up

[root@server1 mnt]# dd if=/dev/zero of=v_1/t1 count=1 bs=100M

1+0 records in

1+0 records out

104857600 bytes (105 MB) copied, 2.14811 s, 48.8 MB/s

[root@server1 mnt]# lvdisplay

--- Logical volume ---

LV Path /dev/vg1/KZ1

LV Name KZ1

VG Name vg1

LV UUID XYTTPp-WpPS-F9Hq-qqzD-xUPJ-adpa-kkug2S

LV Write Access read/write

LV Creation host, time server1, 2022-01-14 19:45:49 +0800

LV snapshot status active destination for v1

LV Status available

# open 0

LV Size 152.00 MiB

Current LE 38

COW-table size 152.00 MiB

COW-table LE 38

Allocated to snapshot 66.08%

Snapshot chunk size 4.00 KiB

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:5
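A classic LVM snapshot becomes unusable if its copy-on-write area fills up completely, so the `Allocated to snapshot` figure is worth watching. The sketch below extracts it from `lvdisplay` output and warns above a threshold. Both helper names are made up, and the 80% default is an arbitrary assumption, not an LVM setting.

```shell
#!/bin/sh
# snapshot_usage: read `lvdisplay` output on stdin, print the COW usage in %.
snapshot_usage() {
    awk '/Allocated to snapshot/ { sub(/%/, "", $NF); print $NF }'
}

# warn_if_full USAGE [LIMIT]: succeed (and warn) when usage reaches LIMIT.
warn_if_full() {
    awk -v u="$1" -v l="${2:-80}" 'BEGIN { exit !(u + 0 >= l + 0) }' &&
        echo "snapshot is ${1}% full; extend it or merge soon"
}

# Sample line copied from the output above; on a live system use:
#   lvdisplay /dev/vg1/KZ1 | snapshot_usage
usage=$(echo "Allocated to snapshot 66.08%" | snapshot_usage)
echo "usage: ${usage}%"
warn_if_full "$usage" 60
```

In this lab the snapshot is 152 MiB and the write is 100 MiB, so a second file of the same size would have overflowed it.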

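After the snapshot is merged back, the snapshot volume is deleted automatically; listing the directory shows that it is back to its original state (the large file t1 is gone)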

[root@server1 mnt]# umount /dev/vg1/v1

[root@server1 mnt]# lvconvert --merge /dev/vg1/KZ1

Merging of volume vg1/KZ1 started.

vg1/v1: Merged: 82.16%

vg1/v1: Merged: 100.00%

[root@server1 mnt]# mount /dev/vg1/v1 v_1/

[root@server1 mnt]# ls v_1/

1.txt lost+found

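Why does the snapshot's usage go up after the original logical volume is modified? Because LVM snapshots use copy-on-write. When the snapshot is created, the system does not copy the origin; it starts with an empty snapshot, which is why the usage was so small at first. Only when data on the origin is modified does the snapshot record the original contents of the changed blocks. When the snapshot is restored, the system combines the blocks saved in the snapshot with the unmodified blocks of the origin to recover the state at the time the snapshot was taken.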
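The copy-on-write behaviour can be imitated with plain files. This is a toy model only, not how LVM stores data: each "block" is a file in a temporary directory, and the function names are invented for the illustration.

```shell
#!/bin/sh
# Toy copy-on-write model: each "block" is a file; names are hypothetical.
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT
mkdir "$work/origin" "$work/snap"

# The origin volume starts with three blocks.
for i in 0 1 2; do echo "old$i" > "$work/origin/$i"; done

# Creating the snapshot copies nothing: snap/ stays empty.

# write_block INDEX DATA: save the original once, then overwrite the origin.
write_block() {
    [ -e "$work/snap/$1" ] || cp "$work/origin/$1" "$work/snap/$1"
    echo "$2" > "$work/origin/$1"
}

# merge_snapshot: move the saved originals back, which empties the snapshot.
merge_snapshot() {
    for f in "$work/snap"/*; do
        [ -e "$f" ] || continue
        mv "$f" "$work/origin/$(basename "$f")"
    done
}

write_block 1 "new1"
write_block 1 "newer1"   # second write: the snapshot keeps the first copy
echo "snapshot holds block(s): $(ls "$work/snap")"
merge_snapshot
echo "block 1 after merge: $(cat "$work/origin/1")"
```

Only block 1 ever occupies space in the snapshot, and after the merge it reads `old1` again while blocks 0 and 2 were never copied at all.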

Delete the logical volume

[root@server1 mnt]# umount /dev/vg1/v1

[root@server1 mnt]# lvremove /dev/vg1/v1

Do you really want to remove active logical volume vg1/v1? [y/n]: y

Logical volume "v1" successfully removed

[root@server1 mnt]# vgremove vg1

Volume group "vg1" successfully removed

[root@server1 mnt]# pvremove /dev/sdb /dev/sdc

Labels on physical volume "/dev/sdb" successfully wiped.

Labels on physical volume "/dev/sdc" successfully wiped.