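Warm congratulations to this site on winning its revival match (

Originally, the cheap server I bought back in school was about to expire, and I had also found that Yuque is quite pleasant to use, so I assumed this site had outlived its historical purpose and could retire with honor.

But for various reasons, long story short, after being dead for the better part of a year, this site has successfully come back to life on a cheap cloud server from some small vendor!

Granted, apart from me, crawler bots, and persistent phishing novices, probably nobody reads this site.

But as a small footprint I leave in this world, and also as a way to keep myself on track:

The way of the blog, proudly in serialization!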

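Environment setup

https://help.aliyun.com/document_detail/315439.html

New documentation (recommended):

https://help.aliyun.com/document_detail/315448.html?spm=a2c4g.11186623.0.0.9a7d5c2aq4loIO

Get the access_key_id

https://ram.console.aliyun.com/manage/ak?spm=a2c6h.12873639.article-detail.8.76c06f779m4CWj

Get the region_id; remember to remove the angle brackets

https://next.api.aliyun.com/api/Rds/2014-08-15/DescribeRegions?lang=PYTHON&tab=DEBUG

Add the endpoint; it can be found in the OpenAPI examples

https://help.aliyun.com/document_detail/315444.html

Here we build a tool that finds the RDS instances in a given region whose whitelist contains a given address, using the official sample as a template to walk through the approach.

Approach: split the job into two parts, querying the RDS instance list and querying the whitelist of a single RDS instance, and develop in a local environment.

First, look at the API that queries the instance list, DescribeDBInstances

https://help.aliyun.com/document_detail/26232.html?spm=a2c4g.11186623.0.0.10197aca0hvjOE

Click Debug on the documentation page to open the online debugging page; the auto-generated code is shown on the right.

RegionId is a required parameter here; we fill in cn-hangzhou as the example and generate the code: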

```python
# -*- coding: utf-8 -*-
# This file is auto-generated, don't edit it. Thanks.
import sys

from typing import List

from alibabacloud_rds20140815.client import Client as Rds20140815Client
from alibabacloud_tea_openapi import models as open_api_models
from alibabacloud_rds20140815 import models as rds_20140815_models
from alibabacloud_tea_util import models as util_models
from alibabacloud_tea_util.client import Client as UtilClient


class Sample:
    def __init__(self):
        pass

    @staticmethod
    def create_client(
        access_key_id: str,
        access_key_secret: str,
    ) -> Rds20140815Client:
        """
        Initialize the account Client with AK & SK
        @param access_key_id:
        @param access_key_secret:
        @return: Client
        @throws Exception
        """
        # Client configuration; the client itself is instantiated in the functions below
        # https://help.aliyun.com/document_detail/315449.html
        config = open_api_models.Config(
            # Required, your AccessKey ID
            access_key_id=access_key_id,
            # Required, your AccessKey Secret
            access_key_secret=access_key_secret
        )
        # Endpoint to access
        config.endpoint = f'rds.aliyuncs.com'
        return Rds20140815Client(config)

    @staticmethod
    def main(
        args: List[str],
    ) -> None:
        # Leaked project code may expose the AccessKey and threaten every resource under the account.
        # This sample is for reference only; the more secure STS method is recommended.
        # For more authentication methods see: https://help.aliyun.com/document_detail/378659.html
        # Instantiate the client
        client = Sample.create_client('accessKeyId', 'accessKeySecret')
        # Initialize the request; the API's own parameters all go here
        describe_dbinstances_request = rds_20140815_models.DescribeDBInstancesRequest(
            region_id='cn-hangzhou'
        )
        # Timeout precedence in the new SDK: RuntimeOptions -> Config setting -> default
        runtime = util_models.RuntimeOptions()
        try:
            # If you copy and run this code, print the API return value yourself
            # The client carries every OpenAPI operation and can call them directly; this is the call-with-options form
            # https://help.aliyun.com/document_detail/315453.html
            client.describe_dbinstances_with_options(describe_dbinstances_request, runtime)
        except Exception as error:
            # Print error if needed
            UtilClient.assert_as_string(error.message)

    @staticmethod
    async def main_async(
        args: List[str],
    ) -> None:
        # Leaked project code may expose the AccessKey and threaten every resource under the account.
        # This sample is for reference only; the more secure STS method is recommended.
        # For more authentication methods see: https://help.aliyun.com/document_detail/378659.html
        client = Sample.create_client('accessKeyId', 'accessKeySecret')
        describe_dbinstances_request = rds_20140815_models.DescribeDBInstancesRequest(
            region_id='cn-hangzhou'
        )
        runtime = util_models.RuntimeOptions()
        try:
            # If you copy and run this code, print the API return value yourself
            await client.describe_dbinstances_with_options_async(describe_dbinstances_request, runtime)
        except Exception as error:
            # Print error if needed
            UtilClient.assert_as_string(error.message)


if __name__ == '__main__':
    Sample.main(sys.argv[1:])
```

When you call this API, it returns a result that looks like JSON, although the result of a local call differs slightly from that of a call made on the web page.

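This is the structure returned by the web call:

```json
{
  "TotalRecordCount": 0,
  "PageRecordCount": 0,
  "RequestId": "B08AAD66-63A2-5432-9C62-3721ADA6DE8C",
  "NextToken": "",
  "PageNumber": 1,
  "Items": {
    "DBInstance": []
  }
}
```

With a local call, the result is wrapped in one more layer, an object type of Alibaba Cloud's own. To pull out one of its values, just use `.` attribute access.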

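For example, say I want to pull the instance ID and instance name out of DBInstance under Items:

```python
# The result is an object
instances = {}  # maps instance ID -> instance description (name)
ret1 = client.describe_dbinstances_with_options(describe_dbinstances_request, runtime)
# The response has two layers, headers and body; the data we want is in body
ret2 = ret1.body.items.dbinstance  # list of instances
for item in ret2:
    instances[item.dbinstance_id] = item.dbinstance_description
```

Which structures exist and which ones to extract can be worked out by comparing the printed output with the documentation; note that the attribute names are lowercase with underscores.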
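As a rough aid for the lowercase-and-underscore naming, here is a minimal sketch that converts field names as they appear in the API documentation into the attribute style used in the snippet above. The function name `to_sdk_attr` is made up for illustration, and the rule is only a heuristic that happens to match the names used in this post; it is not taken from the SDK itself, so the printed output remains the authority.

```python
import re


def to_sdk_attr(api_name: str) -> str:
    """Convert an API field name such as 'DBInstanceId' to 'dbinstance_id'.

    Heuristic only: insert '_' wherever a lowercase letter or digit is
    followed by an uppercase letter, then lowercase the whole string.
    """
    return re.sub(r"(?<=[a-z0-9])(?=[A-Z])", "_", api_name).lower()


if __name__ == "__main__":
    for name in ["DBInstanceId", "DBInstanceDescription", "NextToken"]:
        print(name, "->", to_sdk_attr(name))
```

If a converted name does not exist on the response object, fall back to printing the object and reading the real attribute name from there.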

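At this point you may notice a note on this API:

This operation supports the following two ways of viewing the returned data:

Note

Only one of the two methods above can be used. When a large number of records is returned, method one is recommended for faster queries.

The meaning is clear: whatever they were thinking, you can no longer list every instance in one call (unless you have fewer than 100 instances). So how do you page through and list all the data? Let's look at the parameters mentioned here.

Look at the result we just printed and you will notice something called NextToken. In the web debugger you can also see a group of paging parameters in the parameter configuration. Clearly, we have to pass NextToken by hand to turn the page. This is done by adding the parameter to the API call you just made (and an empty value for this parameter seems to cause an error). So we can write another function that adds this parameter on top of the original one, and then add a check in the script: when the length of the result list equals the page size we set, call this paging function.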
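The paging idea above can be sketched roughly as follows. To keep it runnable without credentials, the real API call is replaced by a stand-in: `fetch_page`, `fake_fetch`, and the `id`/`name` keys are all hypothetical names, not part of the Alibaba Cloud SDK. In a real script, `fetch_page` would build the request (adding `next_token` only when one exists), call the API, and return the instance list together with the NextToken from the response body.

```python
from typing import Callable, Dict, List, Optional, Tuple

# One page of results: (items, next_token). Hypothetical shape for illustration.
Page = Tuple[List[Dict[str, str]], str]


def list_all(fetch_page: Callable[[Optional[str]], Page], page_size: int = 100) -> Dict[str, str]:
    """Collect every instance by following the token from page to page."""
    instances: Dict[str, str] = {}
    token: Optional[str] = None  # first call carries no token at all
    while True:
        items, next_token = fetch_page(token)
        for item in items:
            instances[item["id"]] = item["name"]
        # A short page or an empty token means there is nothing left to fetch.
        if len(items) < page_size or not next_token:
            break
        token = next_token
    return instances


def fake_fetch(token: Optional[str]) -> Page:
    """Stand-in for the API: serves five fake instances, two per page."""
    data = [{"id": f"rm-{i}", "name": f"db-{i}"} for i in range(5)]
    start = int(token) if token else 0
    chunk = data[start:start + 2]
    next_token = str(start + 2) if start + 2 < len(data) else ""
    return chunk, next_token


if __name__ == "__main__":
    print(list_all(fake_fetch, page_size=2))
```

Stopping on either a short page or an empty token is a defensive choice: the post's own rule (page length equals page size) is kept, and the token check avoids one extra call when the last page happens to be full.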

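```python
# Core part
describe_dbinstances_request = rds_20140815_models.DescribeDBInstancesRequest(
    region_id='cn-hangzhou',
    max_results=100,  # don't forget to add this parameter to the first call as well
    next_token=token  # pass the token; keep the token from the previous result, read the same way as above
)
```

Now we have a dictionary containing every instance ID and name. Let's check which of them meet our condition by calling the whitelist API: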

https://help.aliyun.com/document_detail/26241.html

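This API is visibly simple: pass in a DBInstanceId and you get the result we want. If you want to be lazy, fill in any example value to generate the code and then modify it from there. The returned result is handled the same way as above, so I won't repeat it. Use str.find for the string matching, and the results can be saved to a file with the csv library.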
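A minimal sketch of that last filtering and export step, under stated assumptions: the whitelist of each instance is assumed to arrive as a comma-separated string (check the actual response), and the names `find_matches` and `write_matches` are invented for this example. One deliberate substitution: instead of `str.find`, it compares whole entries, so that searching for 10.0.0.1 does not also hit 10.0.0.10.

```python
import csv
import io
from typing import Dict, List, TextIO


def find_matches(whitelists: Dict[str, str], address: str) -> List[str]:
    """Return the instance IDs whose whitelist contains exactly `address`."""
    matches = []
    for instance_id, ip_list in whitelists.items():
        entries = [entry.strip() for entry in ip_list.split(",")]
        if address in entries:
            matches.append(instance_id)
    return matches


def write_matches(out: TextIO, matches: List[str], names: Dict[str, str]) -> None:
    """Write one CSV row per matching instance: ID and name."""
    writer = csv.writer(out)
    writer.writerow(["instance_id", "instance_name"])
    for instance_id in matches:
        writer.writerow([instance_id, names.get(instance_id, "")])


if __name__ == "__main__":
    # Made-up sample data, not real API output.
    names = {"rm-1": "db-1", "rm-2": "db-2"}
    whitelists = {"rm-1": "10.0.0.1,192.168.0.0/24", "rm-2": "10.0.0.10"}
    buffer = io.StringIO()
    write_matches(buffer, find_matches(whitelists, "10.0.0.1"), names)
    print(buffer.getvalue())
```

To write a real file, pass `open("result.csv", "w", newline="")` instead of the in-memory buffer.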

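When a Kubernetes ConfigMap (cm) is mounted without subPath, updating its content is also synced to the file inside the container (with some delay). Applications like Prometheus can hot-reload the new configuration after the file is updated, but applications such as nginx or Apache do not reload the file on their own. These applications can usually be controlled through signals, though, so you can pair them with a sidecar container that handles the automatic update for them.

References: https://blog.fleeto.us/post/refresh-cm-with-signal/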

https://www.jianshu.com/p/57e3819a2e7c

https://jimmysong.io/kubernetes-handbook/usecases/sidecar-pattern.html

https://izsk.me/2020/05/10/Kubernetes-deploy-hot-reload-when-configmap-update/

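Sidecar: short for sidecar proxy, a separate process that runs to provide supplementary functionality to an application, usually as a container in the same pod as the application container. It can add features to the application without adding extra third-party components to it or modifying its code and configuration. There are already many successful examples, such as Thanos, the community's open-source high-availability solution for Prometheus.

How it works: use inotify to watch the config for changes, and when a change happens, send a signal telling nginx to reload its configuration. For nginx this can be done with nginx -s or kill; pay attention to how the signals of the two map onto each other.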
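To make the detection half of this idea concrete, here is a minimal sketch. It swaps inotify for plain comparison of the resolved path (something a polling loop could call), so it is not the inotify mechanism itself. It assumes the mounted path resolves through a symlink that is repointed when the ConfigMap is updated; the function names and the temporary directories are made up for the demo.

```python
import os
import tempfile


def resolved_target(path: str) -> str:
    """Return the fully resolved path, similar to `readlink -f`."""
    return os.path.realpath(path)


def config_changed(path: str, last_target: str) -> bool:
    """True when the path now resolves somewhere else than it did before."""
    return resolved_target(path) != last_target


def swap_symlink(link: str, new_target: str) -> None:
    """Repoint a symlink in one step (simulates a config update in the demo)."""
    tmp = link + ".tmp"
    os.symlink(new_target, tmp)
    os.replace(tmp, link)  # rename over the old link


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        old_dir = os.path.join(root, "v1")
        new_dir = os.path.join(root, "v2")
        os.mkdir(old_dir)
        os.mkdir(new_dir)
        link = os.path.join(root, "conf.d")
        os.symlink(old_dir, link)

        seen = resolved_target(link)
        print("changed before update:", config_changed(link, seen))
        swap_symlink(link, new_dir)
        print("changed after update:", config_changed(link, seen))
```

A watcher built on this would remember the last resolved target, check periodically, and send the reload signal whenever `config_changed` returns true. inotify avoids the polling delay, which is why the post uses it.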

http://io.upyun.com/2017/08/19/nginx-signals/

http://www.wuditnt.com/775/

-s signal Send a signal to the master process. The argument signal can be one of: stop, quit, reopen, reload.

The following table shows the corresponding system signals:

stop SIGTERM

quit SIGQUIT

reopen SIGUSR1

reload SIGHUP
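The table above can be written down as a small lookup, which is handy when a script accepts the `nginx -s` style name but has to call `kill`. A minimal sketch: `NGINX_SIGNALS` and `kill_argument` are names invented for this example, and it assumes a Unix-like system where these signals exist.

```python
import signal

# `nginx -s <action>` -> the system signal it corresponds to (from the table above)
NGINX_SIGNALS = {
    "stop": signal.SIGTERM,
    "quit": signal.SIGQUIT,
    "reopen": signal.SIGUSR1,
    "reload": signal.SIGHUP,
}


def kill_argument(action: str) -> str:
    """Return the `kill` flag equivalent to `nginx -s <action>`, e.g. '-HUP'."""
    name = NGINX_SIGNALS[action].name  # e.g. 'SIGHUP'
    return "-" + name[len("SIG"):]


if __name__ == "__main__":
    for action in NGINX_SIGNALS:
        print(f"nginx -s {action}  ~  kill {kill_argument(action)} <master pid>")
```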

SIGNALS

The master process of nginx can handle the following signals:

SIGINT, SIGTERM Shut down quickly.

SIGHUP Reload configuration, start the new worker process with a new configuration, and gracefully shut

down old worker processes.

SIGQUIT Shut down gracefully.

SIGUSR1 Reopen log files.

SIGUSR2 Upgrade the nginx executable on the fly.

SIGWINCH Shut down worker processes gracefully.

Prepare the watch script

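```sh
#!/bin/sh
while :
do
    # Resolve the real path of the file
    REAL=`readlink -f ${FILE}`
    # Watch for the specified event
    inotifywait -e delete_self "${REAL}"
    # Get the PID of the process with the given name
    PID=`pgrep ${PROCESS} | head -1`
    # Send the signal
    kill "-${SIGNAL}" "${PID}"
    # nginx -s reload
done
```

Prepare the sidecar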

```dockerfile
FROM alpine
RUN apk add --update inotify-tools
ENV FILE="/etc/nginx/conf.d/" PROCESS="nginx" SIGNAL="hup"
COPY inotifynginx.sh /usr/local/bin
CMD ["/usr/local/bin/inotifynginx.sh"]
```

Prepare the YAML

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-test
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-test
  template:
    metadata:
      labels:
        app: nginx-test
    spec:
      shareProcessNamespace: true
      containers:
        - name: "nginx-test"
          image: nginx
          ports:
            - name: httpd
              containerPort: 8888
              protocol: TCP
          volumeMounts:
            - mountPath: /etc/nginx/conf.d/
              name: nginx-config
              readOnly: true
            - mountPath: /usr/share/nginx/
              name: nginx-file
        - name: "inotify"
          image: inotify:v1
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - name: nginx-config
              mountPath: /etc/nginx/conf.d/
              readOnly: true
      volumes:
        - name: nginx-config
          configMap:
            name: nginx-config
        - name: nginx-file
          hostPath:
            path: /tmp/nginx
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service-test
spec:
  selector:
    app: nginx-test
  type: NodePort
  ports:
    - port: 8888
      targetPort: 8888
      nodePort: 31111
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config
  labels:
    app: nginx-test
data:
  test1.conf: |
    server{
      listen *:8888;
      server_name www.test1.xyz;
      location /
      {
        root /usr/share/nginx/;
        index test1.html;
      }
    }
```

The containers in the pod share a process namespace (https://kubernetes.io/zh-cn/docs/tasks/configure-pod-container/share-process-namespace/). Note that not every container is suited to this approach; see the link for details.

Test

```shell
[root@worker-node01 ~]# curl 10.99.31.106:8888
test1
# Change the index in the ConfigMap
[root@worker-node01 ~]# kubectl get cm
NAME               DATA   AGE
confnginx          1      4d1h
kube-root-ca.crt   1      24d
nginx-config       1      48s
[root@worker-node01 ~]# kubectl edit nginx-config
error: the server doesn't have a resource type "nginx-config"
[root@worker-node01 ~]# kubectl edit cm nginx-config
configmap/nginx-config edited
# nginx has hot-reloaded the config automatically
[root@worker-node01 ~]# curl 10.99.31.106:8888
test2
```

Kubernetes probes support three detection methods

This post uses the official Jenkins YAML as the demo example

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: jenkins
  namespace: devops-tools
spec:
  replicas: 1
  selector:
    matchLabels:
      app: jenkins-server
  template:
    metadata:
      labels:
        app: jenkins-server
    spec:
      securityContext:
        fsGroup: 1000      # supplementary group 1000
        runAsUser: 1000    # every process in the container runs as user ID 1000
      serviceAccountName: jenkins-admin   # give this application the account created above
      containers:
        - name: jenkins
          image: jenkins/jenkins:lts
          resources:       # resource limits
            limits:
              memory: "2Gi"
              cpu: "1000m"
            requests:
              memory: "500Mi"
              cpu: "500m"
          ports:
            - name: httpport
              containerPort: 8080
            - name: jnlpport
              containerPort: 50000
          livenessProbe:   # liveness probe
            httpGet:
              path: "/login"
              port: 8080
            initialDelaySeconds: 90
            periodSeconds: 10
            timeoutSeconds: 5
            failureThreshold: 5
          readinessProbe:  # readiness probe
            httpGet:
              path: "/login"
              port: 8080
            initialDelaySeconds: 60
            periodSeconds: 10
            timeoutSeconds: 5
            failureThreshold: 3
          volumeMounts:
            - name: jenkins-data
              mountPath: /var/jenkins_home
      volumes:
        - name: jenkins-data
          persistentVolumeClaim:
            claimName: jenkins-pv-claim
```

The liveness probe periodically checks whether the application inside the container is still alive. If it has died, Kubernetes tries to restart the container.

Restarts caused by probes are handled by the kubelet on the node; the control plane on the master does not deal with them.

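```yaml
livenessProbe:              # liveness probe
  httpGet:                  # use an HTTP GET request
    path: "/login"          # path
    port: 8080              # port
  initialDelaySeconds: 90   # wait 90s before the first probe
  periodSeconds: 10         # probe every 10 seconds
  timeoutSeconds: 5         # a probe with no response within 5s counts as failed
  failureThreshold: 5       # consecutive failures before the handling action is triggered
```

Let's test this probe by hand: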

```shell
[root@master ~]# kubectl get pods -n devops-tools
NAME                       READY   STATUS    RESTARTS   AGE
jenkins-559d8cd85c-qg9zx   1/1     Running   0          15d
[root@master ~]# kubectl exec -it -n devops-tools jenkins-559d8cd85c-qg9zx -c jenkins -- bash
jenkins@jenkins-559d8cd85c-qg9zx:/$ ls -l /proc/*/exe
lrwxrwxrwx 1 jenkins jenkins 0 Jan 13 08:51 /proc/1/exe -> /sbin/tini
lrwxrwxrwx 1 jenkins jenkins 0 Jan 29 03:06 /proc/3465/exe -> /bin/bash
lrwxrwxrwx 1 jenkins jenkins 0 Jan 29 05:08 /proc/3515/exe -> /bin/bash
lrwxrwxrwx 1 jenkins jenkins 0 Jan 13 08:51 /proc/7/exe -> /opt/java/openjdk/bin/java
lrwxrwxrwx 1 jenkins jenkins 0 Jan 29 05:08 /proc/self/exe -> /bin/ls
jenkins@jenkins-559d8cd85c-qg9zx:/$ kill -9 /opt/java/openjdk/bin/java
bash: kill: /opt/java/openjdk/bin/java: arguments must be process or job IDs
jenkins@jenkins-559d8cd85c-qg9zx:/$ kill -9 7
jenkins@jenkins-559d8cd85c-qg9zx:/$ command terminated with exit code 137
[root@master ~]# kubectl get pods -n devops-tools
NAME                       READY   STATUS    RESTARTS      AGE
jenkins-559d8cd85c-qg9zx   0/1     Running   1 (19s ago)   15d
# Wait a while
[root@master ~]# kubectl get pods -n devops-tools
NAME                       READY   STATUS    RESTARTS       AGE
jenkins-559d8cd85c-qg9zx   1/1     Running   1 (119s ago)   15d
[root@master ~]# kubectl describe pod jenkins-559d8cd85c-qg9zx -n devops-tools
Name:         jenkins-559d8cd85c-qg9zx
Namespace:    devops-tools
Priority:     0
Node:         worker-node01/172.30.254.88
Start Time:   Fri, 13 Jan 2023 16:51:36 +0800
Labels:       app=jenkins-server
              pod-template-hash=559d8cd85c
Annotations:  <none>
Status:       Running
IP:
IPs:
  IP:
Controlled By:  ReplicaSet/jenkins-559d8cd85c
Containers:
  jenkins:
    Container ID:   docker://d7342bb100c43d330bfb84b042b4a13317d6fe5ec82c6c44c90dd90502d1dacf
    Image:          jenkins/jenkins:lts
    Image ID:       docker-pullable://jenkins/jenkins@sha256:c1d02293a08ba69483992f541935f7639fb10c6c322785bdabaf7fa94cd5e732
    Ports:          8080/TCP, 50000/TCP
    Host Ports:     0/TCP, 0/TCP
    State:          Running
      Started:      Sun, 29 Jan 2023 13:12:38 +0800
    Last State:     Terminated    # terminated
      Reason:       Error
      Exit Code:    137    # 137 means 128+x, where x is the signal number; here x is 9 (SIGKILL), so the process was forcibly killed
      Started:      Fri, 13 Jan 2023 16:51:37 +0800
      Finished:     Sun, 29 Jan 2023 13:12:37 +0800
    Ready:          True
    Restart Count:  1
```

The readiness probe periodically checks the container. When the container's readiness probe returns success, the container is ready to accept requests.

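What counts as "ready" for a program is up to the programmer who designed it. Kubernetes simply pokes the configured path inside the container and looks at the returned status.

When starting a container, you can set a wait time; Kubernetes runs the first probe only after that time has passed.

Unlike liveness, a failed readiness probe does not restart the container, but a pod whose readiness probe fails stops receiving requests. This makes it a good fit for services that take a long time to start up: if such a service were sent traffic right after being deployed, before it was ready, clients would be greeted with a pile of errors.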
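The difference between the two probes can be sketched as a tiny simulation: both count consecutive failures against failureThreshold, and only the consequence differs. This is an illustration of the counting rule, not kubelet code; `first_trigger` and `consequence` are names invented here.

```python
from typing import Iterable


def first_trigger(results: Iterable[bool], failure_threshold: int) -> int:
    """Return the 1-based index of the probe that hits the threshold, or -1.

    Only consecutive failures count: any success resets the counter.
    """
    consecutive = 0
    for index, ok in enumerate(results, start=1):
        consecutive = 0 if ok else consecutive + 1
        if consecutive >= failure_threshold:
            return index
    return -1


def consequence(probe_type: str) -> str:
    """What happens once the threshold is reached, per probe type."""
    return {
        "liveness": "container is restarted",
        "readiness": "pod stops receiving requests (no restart)",
    }[probe_type]


if __name__ == "__main__":
    history = [True, False, False, True, False, False, False]
    print(first_trigger(history, failure_threshold=3), consequence("readiness"))
```

With the values from the Jenkins YAML, liveness needs 5 failed probes in a row and readiness needs 3, each probe being 10 seconds apart.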

readinessProbe: #就绪探针

httpGet:

path: "/login"

port: 8080

initialDelaySeconds: 60 #启动等待

periodSeconds: 10 #间隔

timeoutSeconds: 5 #超时时间

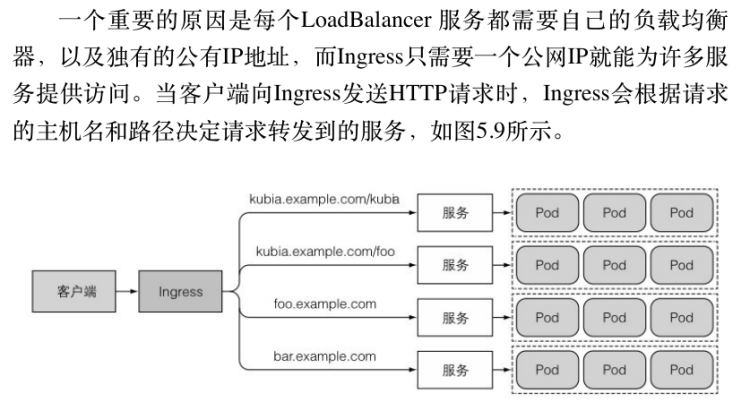

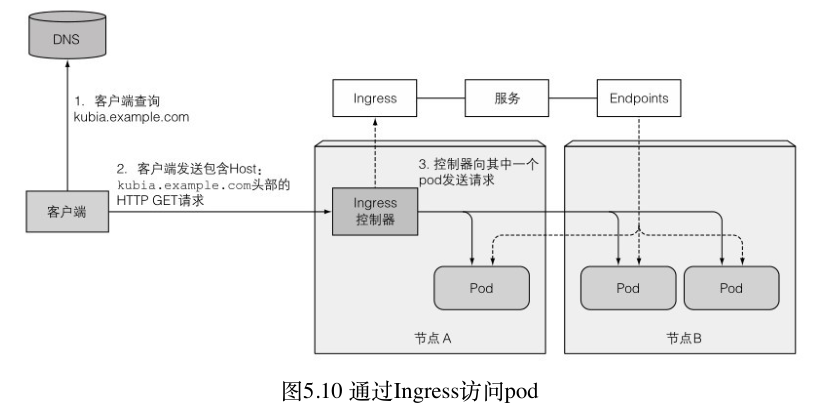

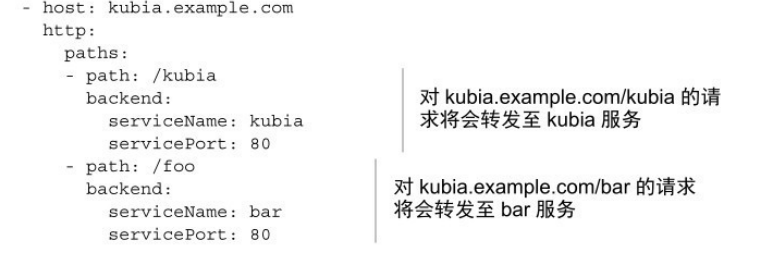

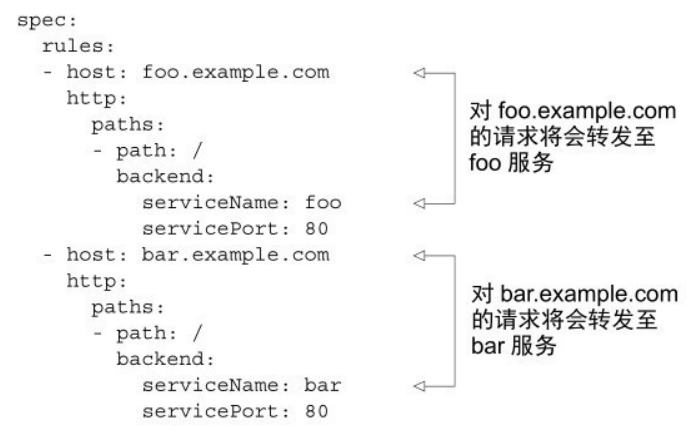

failureThreshold: 3 #失败次数ingress为集群提供基于域名+path的负载均衡,相较于lb,其只需要一个ip就可以为多个服务提供功能

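The ingress used differs between Kubernetes environments. Cloud vendors generally ship their own plugins, and you can find the details and usage in the manuals on their websites. Here we have a self-built cluster and use ingress-nginx as the ingress controller.

https://kubernetes.github.io/ingress-nginx/

You can first run kubectl get pods --all-namespaces to check whether an ingress controller is installed. If not (a cluster installed by following my earlier tutorial certainly has none),

download the Helm chart from GitHub, or use the YAML directly.

The YAML is used as the example here; the attachment is at the end of the post.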

```shell
[root@master ~]# sed -i 's@k8s.gcr.io/ingress-nginx/controller:v1.0.0\(.*\)@willdockerhub/ingress-nginx-controller:v1.0.0@' ingresscontroller.yaml
[root@master ~]# sed -i 's@k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.0\(.*\)$@hzde0128/kube-webhook-certgen:v1.0@' ingresscontroller.yaml
[root@master ~]# kubectl apply -f ingresscontroller.yaml
# Output like the following means everything is fine
[root@master ~]# kubectl get po -n ingress-nginx
NAME                                        READY   STATUS      RESTARTS   AGE
ingress-nginx-admission-create-xgl99        0/1     Completed   0          10m
ingress-nginx-admission-patch-vjxgm         0/1     Completed   1          10m
ingress-nginx-controller-6b64bc6f47-2lbvn   1/1     Running     0          10m
```

How ingress works

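The client accesses the domain name -> DNS returns the ingress IP -> the client sends an HTTP request to the ingress -> the ingress uses the Host header in the request to find the matching service, looks up the pod IPs in that service's endpoints, and sends the request to one of the pods.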
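The last hop of that flow (Host header to service to pod) can be sketched as two lookups. All data here is invented for illustration (`RULES`, `ENDPOINTS`, the pod IPs), and picking a pod at random is a stand-in for whatever load-balancing the real controller applies.

```python
import random
from typing import Dict, List, Optional

# Hypothetical data: Host header -> service name, and service -> pod IPs (its endpoints)
RULES: Dict[str, str] = {"test.example.com": "test"}
ENDPOINTS: Dict[str, List[str]] = {"test": ["10.244.1.5", "10.244.2.7"]}


def route(host: str) -> Optional[str]:
    """Return the pod IP a request for `host` would be sent to, or None."""
    service = RULES.get(host)
    if service is None:
        return None  # no rule for this host
    pods = ENDPOINTS.get(service, [])
    return random.choice(pods) if pods else None


if __name__ == "__main__":
    print(route("test.example.com"))
    print(route("unknown.example.com"))
```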

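Before creating an Ingress, you still need to prepare resources such as the Deployment and Service for your application.

The simplest possible Ingress looks like this: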

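```yaml
apiVersion: extensions/v1beta1   # removed in Kubernetes 1.22; newer clusters need networking.k8s.io/v1
kind: Ingress
metadata:
  name: test-ingress
spec:
  rules:                         # rules and paths are both plural: multiple host and path mappings are supported
    - host: test.example.com     # the ingress maps your service to this domain
      http:
        paths:
          - path: /testpath
            backend:
              serviceName: test  # send all traffic to the service named test
              servicePort: 80    # on port 80
            pathType:
# It can also be written as (the form used by networking.k8s.io/v1):
            backend:
              service:
                name: myservicea
                port:
                  number: 80
            pathType:
```

Every path in an Ingress must have a corresponding path type (Path Type). Paths without an explicitly set pathType fail validation. Three path types are currently supported: ImplementationSpecific, Exact, and Prefix.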
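To show how the path types differ in practice, here is a minimal sketch of the matching rules for `Exact` and `Prefix` as described in the Kubernetes Ingress documentation (Prefix compares whole path elements, so `/testpath` does not match `/testpathx`). `path_matches` is a name invented for this example; `ImplementationSpecific` is left out because its behavior depends on the ingress controller.

```python
def path_matches(rule_path: str, request_path: str, path_type: str) -> bool:
    """Check a request path against an Ingress rule path for Exact or Prefix."""
    if path_type == "Exact":
        # Case-sensitive, character-for-character match
        return rule_path == request_path
    if path_type == "Prefix":
        # Compare element by element, ignoring empty parts from extra slashes
        rule_parts = [part for part in rule_path.split("/") if part]
        request_parts = [part for part in request_path.split("/") if part]
        return request_parts[:len(rule_parts)] == rule_parts
    raise ValueError(f"matching for pathType {path_type!r} depends on the controller")


if __name__ == "__main__":
    for request in ["/testpath", "/testpath/a", "/testpathx"]:
        print(request, path_matches("/testpath", request, "Prefix"))
```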

Mapping multiple paths

Mapping multiple hosts

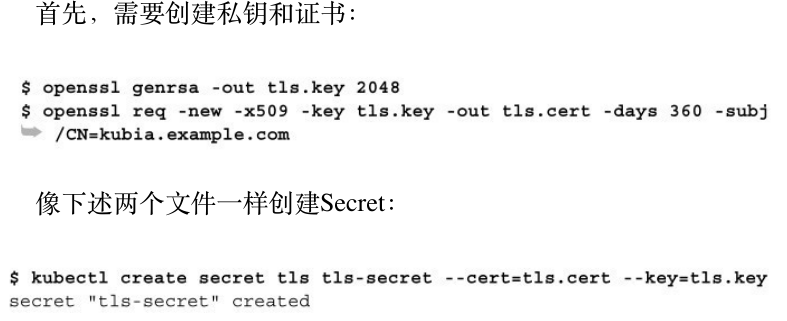

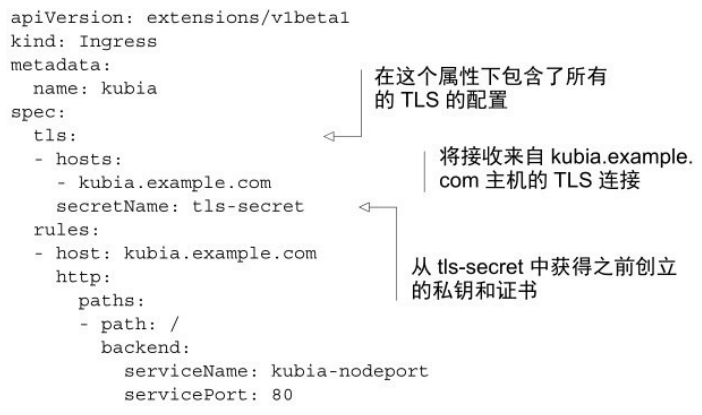

Configuring TLS

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
---
# Source: ingress-nginx/templates/controller-serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
automountServiceAccountToken: true
---
# Source: ingress-nginx/templates/controller-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
data:
---
# Source: ingress-nginx/templates/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
---
# Source: ingress-nginx/templates/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/controller-role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - configmaps
      - pods
      - secrets
      - endpoints
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - configmaps
    resourceNames:
      - ingress-controller-leader
    verbs:
      - get
      - update
  - apiGroups:
      - ''
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
---
# Source: ingress-nginx/templates/controller-rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/controller-service-webhook.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller-admission
  namespace: ingress-nginx
spec:
  type: ClusterIP
  ports:
    - name: https-webhook
      port: 443
      targetPort: webhook
      appProtocol: https
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
---
# Source: ingress-nginx/templates/controller-service.yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: http
      appProtocol: http
    - name: https
      port: 443
      protocol: TCP
      targetPort: https
      appProtocol: https
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
---
# Source: ingress-nginx/templates/controller-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/component: controller
  revisionHistoryLimit: 10
  minReadySeconds: 0
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/component: controller
    spec:
      dnsPolicy: ClusterFirst
      containers:
        - name: controller
          image: k8s.gcr.io/ingress-nginx/controller:v1.0.0@sha256:0851b34f69f69352bf168e6ccf30e1e20714a264ab1ecd1933e4d8c0fc3215c6
          imagePullPolicy: IfNotPresent
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
          args:
            - /nginx-ingress-controller
            - --election-id=ingress-controller-leader
            - --controller-class=k8s.io/ingress-nginx
            - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
            - --validating-webhook=:8443
            - --validating-webhook-certificate=/usr/local/certificates/cert
            - --validating-webhook-key=/usr/local/certificates/key
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            runAsUser: 101
            allowPrivilegeEscalation: true
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: LD_PRELOAD
              value: /usr/local/lib/libmimalloc.so
          livenessProbe:
            failureThreshold: 5
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
            - name: webhook
              containerPort: 8443
              protocol: TCP
          volumeMounts:
            - name: webhook-cert
              mountPath: /usr/local/certificates/
              readOnly: true
          resources:
            requests:
              cpu: 100m
              memory: 90Mi
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      volumes:
        - name: webhook-cert
          secret:
            secretName: ingress-nginx-admission
---
# Source: ingress-nginx/templates/controller-ingressclass.yaml
# We don't support namespaced ingressClass yet
# So a ClusterRole and a ClusterRoleBinding is required
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: nginx
  namespace: ingress-nginx
spec:
  controller: k8s.io/ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/validating-webhook.yaml
# before changing this value, check the required kubernetes version
# https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/#prerequisites
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  name: ingress-nginx-admission
webhooks:
  - name: validate.nginx.ingress.kubernetes.io
    matchPolicy: Equivalent
    rules:
      - apiGroups:
          - networking.k8s.io
        apiVersions:
          - v1
        operations:
          - CREATE
          - UPDATE
        resources:
          - ingresses
    failurePolicy: Fail
    sideEffects: None
    admissionReviewVersions:
      - v1
    clientConfig:
      service:
        namespace: ingress-nginx
        name: ingress-nginx-controller-admission
        path: /networking/v1/ingresses
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ingress-nginx-admission
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
rules:
  - apiGroups:
      - admissionregistration.k8s.io
    resources:
      - validatingwebhookconfigurations
    verbs:
      - get
      - update
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx-admission
subjects:
  - kind: ServiceAccount
    name: ingress-nginx-admission
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: ingress-nginx-admission
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
rules:
  - apiGroups:
      - ''
    resources:
      - secrets
    verbs:
      - get
      - create
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: ingress-nginx-admission
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx-admission
subjects:
  - kind: ServiceAccount
    name: ingress-nginx-admission
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-createSecret.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress-nginx-admission-create
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
spec:
  template:
    metadata:
      name: ingress-nginx-admission-create
      labels:
        helm.sh/chart: ingress-nginx-4.0.1
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/version: 1.0.0
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/component: admission-webhook
    spec:
      containers:
        - name: create
          image: k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.0@sha256:f3b6b39a6062328c095337b4cadcefd1612348fdd5190b1dcbcb9b9e90bd8068
          imagePullPolicy: IfNotPresent
          args:
            - create
            - --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc
            - --namespace=$(POD_NAMESPACE)
            - --secret-name=ingress-nginx-admission
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      restartPolicy: OnFailure
      serviceAccountName: ingress-nginx-admission
      nodeSelector:
        kubernetes.io/os: linux
      securityContext:
        runAsNonRoot: true
        runAsUser: 2000
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-patchWebhook.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress-nginx-admission-patch
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
spec:
  template:
    metadata:
      name: ingress-nginx-admission-patch
      labels:
        helm.sh/chart: ingress-nginx-4.0.1
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/version: 1.0.0
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/component: admission-webhook
    spec:
      containers:
        - name: patch
          image: k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.0@sha256:f3b6b39a6062328c095337b4cadcefd1612348fdd5190b1dcbcb9b9e90bd8068
          imagePullPolicy: IfNotPresent
          args:
            - patch
            - --webhook-name=ingress-nginx-admission
            - --namespace=$(POD_NAMESPACE)
            - --patch-mutating=false
            - --secret-name=ingress-nginx-admission
            - --patch-failure-policy=Fail
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      restartPolicy: OnFailure
      serviceAccountName: ingress-nginx-admission
      nodeSelector:
        kubernetes.io/os: linux
      securityContext:
        runAsNonRoot: true
        runAsUser: 2000
```